A client has asked me recently to look into why one of their editors was performing slowly. The editor was using a table to display a large imported data structure. The initial rendering of the table took around 6 seconds on my notebook, and typing a character caused the editor to pause for another 6 seconds, which was unacceptable. Considering that the actual language users would be using a VM as is often the case in big companies, the delay there could be even longer.

After some profiling I found several reasons for the low performance that I want to share.

Big roots render slowly

The initial rendering time increases with the size of the AST. MPS tries to cache parts of the editor once built and after the initial render it will only update the parts that require changes, but collecting the necessary dependency information to achieve this incrementality also takes time.

How big is too big? Thousands of descendants are going to take several seconds to render. For example, MPS-internal

class

_FunctionTypes

takes around 3 seconds to render on a powerful MacBook Pro. The tree contains 8835 nodes (as computed by evaluating

nodeRef.descendants<+>.size in the MPS Console).

What can you do about it?

It may be easier to avoid large roots than to solve the resulting editor problems. Consider splitting the content into many small root nodes and a “table of contents” node listing the small roots. Sometimes the latter node is not even required as you can query the model itself for its contents.

If you can’t split the content (as I couldn’t in my case), you will have to live with the slow initial rendering, but it may be possible to take advantage of the incremental rendering. Unless you use tables.

Tables disable incremental rendering

Using tables from MPS-extensions causes incremental updates to be disabled (as of version 2021.1). This means that the full table is going to be updated on any edit in any of the cells and we don’t take advantage of any incrementality built into MPS. This is of course very slow for large tables.

What can you do about it?

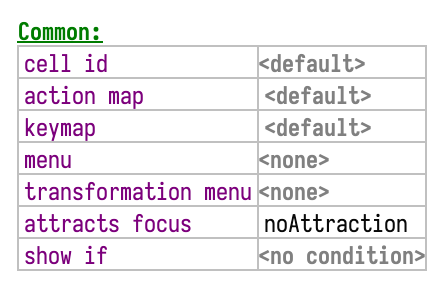

If your tables are simple grids, consider replacing them with a vertical grid layout with draw-border style. You may

have noticed it in the MPS inspector for editor cell models:

Example of a vertical grid layout

A collection with a vertical grid layout is supposed to contain child collections with horizontal layouts. It will then

lay out the child collections as table rows, adjusting each grandchild to take the same width as those above and below

it in the grid. The draw-border style around the entire vertical collection and around each grandchild will draw the

grid lines.

Watch out for celllayout

Using styles from the language de.itemis.mps.editor.celllayout.styles (such as _grow-x or _grid-layout-flatten) or

horizontal or vertical line cells from the language de.itemis.mps.editor.celllayout will cause a top-down layout

algorithm to be activated. In the words of Sascha Lißon, the author:

In the MPS layout algorithms the children decide about their width and height and the parent cell can only arrange the already layouted children. The algorithms from the

celllayoutlanguage are similar to the Swing layouters where the child is asked for its min/max/preferred size, but the parent decides about the size of the child and the child then has to fit itself into these bounds. This is used in tables and diagram, but also for the styles defined in thecelllayout.styleslanguage.

The top-down algorithm produces nice layouts and can, for example, draw a horizontal line for the full width of the editor, but profiling shows that this layout is about twice as slow as the original MPS layouts, and will also cause relayouts to happen more often. In one of my tests removing a horizontal line from the editor caused the relayout time to drop from 6×150 ms to 2× or 1×75 ms. This meant that the editing latency dropped from around a second to a more acceptable <100 ms. A hundred milliseconds is still quite a lot but we are talking about a table of around 1500 rows in this case.

What can you do about it?

Remove the celllayout lines and styles from the editors you are using and see if they perform better and whether the

different layout is acceptable.

Check the checking rules

Another potential source of sluggishness on big roots are checking rules. A rule may be written in a non-scalable way, employing an algorithm with quadratic or worse complexity. As a result, the rule that took a few milliseconds to run on a small root suddenly takes tens of seconds to finish on a large one.

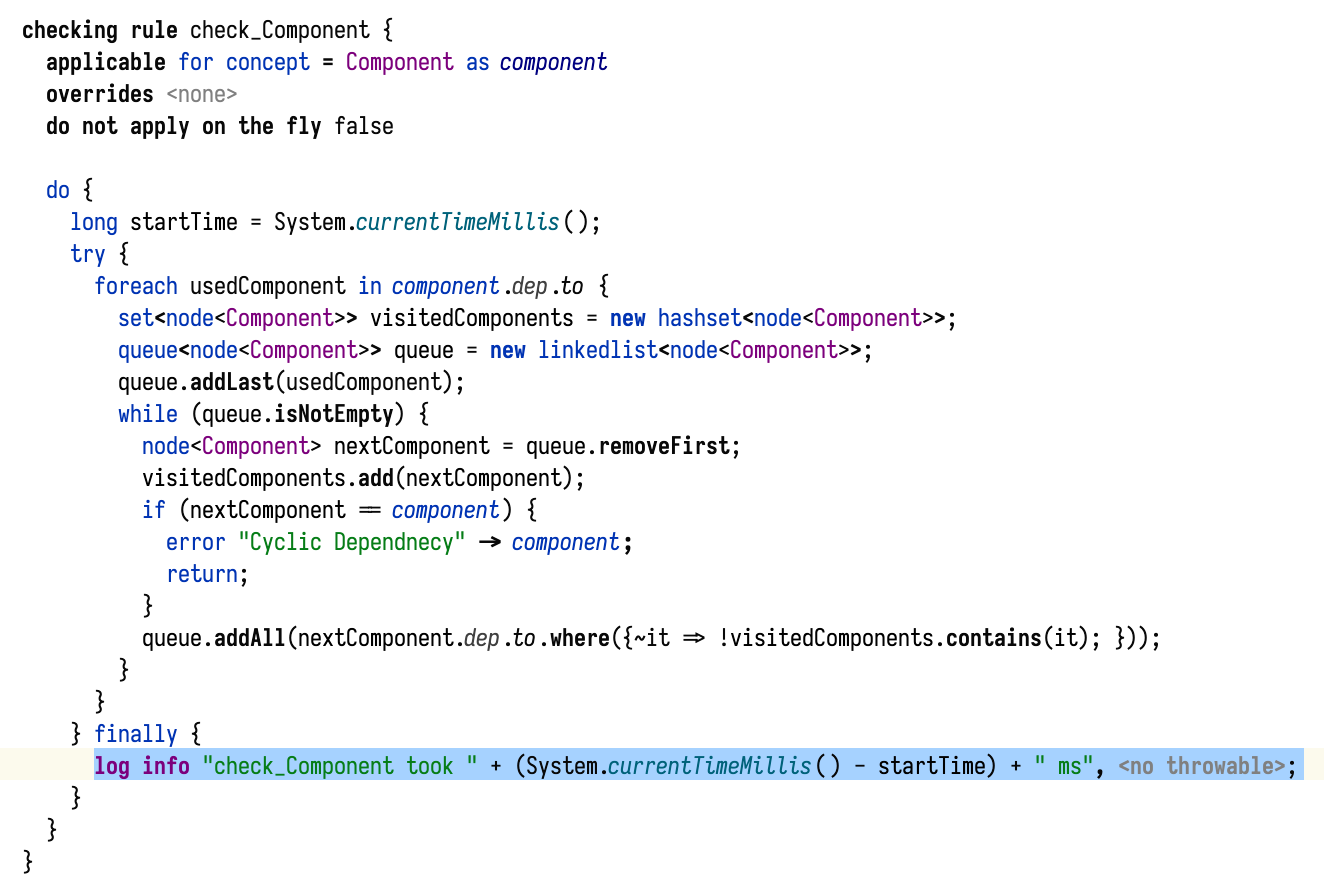

There isn’t any built-in support in MPS for profiling checking rules, but you can profile MPS itself using a JVM profiler or a Java IDE such as IntelliJ IDEA. If you suspect that a given rule is taking a long time, you can then confirm the suspicion by logging how much time it takes:

Logging the duration of a checking rule

What can you do about it?

Change the data structure or the algorithm

Sometimes, a simple change in the used data structure or algorithm can make the rule perform much better. For example,

when checking for uniqueness of child names (as check_ContainerOfUniqueNames does in mbeddr), a naive implementation

would look like this:

sequence<node<INamedConcept>> nodes = container.nodesThatShouldHaveUniqueNames();

foreach child in nodes {

if (nodes.any({~it => it != child && it.name :eq: child.name})) {

error "duplicate name: " + child.name -> child;

}

}

This algorithm has quadratic complexity because nodes.any may potentially traverse the entire collection and would run

very slowly on large nodes. A simple improvement would be to use a set of names seen so far, since set lookups are

almost constant.

set<string> names = new hashset<string>;

sequence<node<INamedConcept>> nodes = container.nodesThatShouldHaveUniqueNames();

foreach child in nodes {

if (names.contains(child.name)) {

error "duplicate name: " + child.name -> child;

}

names.add(child.name);

}

Split the rule

If a rule can be split into multiple rules, it will help keep the UI responsive since the typechecker would be able to cancel the background checking thread between the two rules. For example, if a rule that checks that inputs and outputs of each component are connected, you may be able to split it into two rules, one for inputs and another for outputs.

Parallelize the rule

If a rule checks something for each child (look for a foreach loop inside the rule), it may help to move the rule to the child. MPS will then check each child separately and cancel the checker in between two children, if necessary.